Editor’s Note: This text course is an edited transcript of a live webinar.

Dr. Brent Edwards: For the past 100 years or so, auditory research and clinical issues have focused on the auditory periphery. We have focused on audibility in the outer ear so that sound gets through to the damaged cochlea. We have tried to improve the speech-to-noise ratio of the sound coming to the outer ear. In terms of understanding the needs of the patient, designing hearing aid technology, and testing how well patients are doing with technology, tests of audibility have been the focus.

It has only been over the past 10 years that we have started to think about the more complex aspects of hearing. I want to give you a couple of examples that represent how hearing is much more complicated than audibility. In Scotland, Mitchell et al. (2008) performed an interesting experiment wherein they had subjects hold one arm in ice water, and they measured how long the person could hold it there before they had to remove it because it was too painful. Then they asked them to report subjectively how painful that experience was. They did this in three conditions. One was in silence, one was when they were playing a random piece of music, and another one was when they had people listening to music that they enjoyed. They found that the subjects were able to keep their arms under water longer in the third condition, while listening to music that they liked. They expressed less pain when asked afterwards how painful the experience was. How does what you hear affect your pain receptors and perception of pain? We are starting to understand that all aspects of perception are linked together, and hearing is linked in many ways to other things that we do and how we live our lives. It is because of the complex interaction within the cognitive system. This is where we are starting to focus our effort to understand that relationship.

Cognition and Hearing Technology

Lunner and Sundewall-Thorén (2007) researched the hypothesis that cognition could have an impact on the benefit people receive from hearing aid technology. They found a correlation between compression (fast or slow) and score on a cognitive test called visual letter monitoring (VLM). The people who had a higher level of cognitive functioning were able to get more benefit from fast compression than the people who appeared to have poor cognitive functioning. This suggests that perhaps the capabilities of the cognitive system dictated whether or not subjects were able to take advantage of things like fast-acting compression.

Additional research has shown that there is a correlation between the benefit a subject experiences from advanced hearing aid technology and a similar measure of cognitive ability (McCoy, Tun, Cox, Colangelo, Stewart & Wingfield, 2005). This paper was a landmark publication in 2005. It opened people’s eyes to the relationship between hearing and other aspects of cognition. These researchers found that people with hearing loss had a harder time with memory tasks. These were tasks that had nothing to do with hearing. It seemed that the hearing loss was somehow affecting the person’s ability to store things in memory and recall things from memory. Why would hearing loss have any effect whatsoever on one’s memory ability? They should be unrelated. This is where we are starting to see the complex interaction between hearing loss and cognitive function. I will get to the reason why hearing loss could cause memory issues in a moment.

The Auditory System and Cognitive Function

Our auditory system has evolved over tens of thousands of years to function extraordinarily well, and it is exquisitely designed to process sound in very complex ways, but in an easy and natural way for all of us. In order for the cognitive system in the brain to do its job, it relies on a high-quality signal coming from the auditory periphery. If you do not have cochlear damage, then you are going to do just fine, and the brain will be able to process sound in the way nature intended, with a clean signal getting to the cognitive system. If, however, you have cochlear damage resulting in sensorineural hearing loss, then the signal getting to the brain is distorted and unnatural. It does not represent the kind of sound or signal that the brain has evolved to process. The brain has a harder time trying to interpret the signal from the auditory system, and it applies more resources to figure that out. It struggles. When it struggles, it works harder. Because it is working harder, the whole cognitive system is not able to function as well. Other things that rely on the proper functioning of the cognitive system, such as memory, comprehension, and a variety of tasks, suffer because more resources are being spent on understanding what is being heard.

I liken this to the finely-tuned engine of a high-performance Ferrari that is designed for a horsepower of over 650. It has taken years and years of amazing engineering to make this the greatest driving system in the world; that is like our brain. However, if you take this incredibly finely-tuned system and feed it low-grade gasoline that contains dirt and other contaminants, it will not get the kind of input that it needs to function well. The performance of that Ferrari is going to turn into the equivalent of a K car. Both systems will still allow you to drive down the road. Both the Ferrari and K car will still get you from point A to point B, but one will do so in a much more effective and high-performance manner. This is like what is happening anatomically when you have a finely-tuned system, but it is not being driven and used in the way that it was designed. It will not function as well, and that is what is happening with the brain when you add hearing loss as the input to the auditory system.

We have learned from a decade of research that because of hearing loss, you get signal distortion travelling up the VIIIth nerve, and this can cause increased cognitive load, fatigue, poor memory, poor auditory scene analysis, difficulty in focusing your attention, and poor mental health.

Two questions that have been a focus for researchers in this field are, “How does hearing loss affect cognitive function?” and, “How can hearing aids affect cognitive function?” If hearing loss has a negative effect on cognitive ability, if it makes it worse or work harder, can hearing aids mitigate or reverse that effect? Can they make cognition easier? Can they make it more natural? Can they improve the functioning of the cognitive system? These are critical questions as we start to understand what the consequences of hearing loss are on cognition.

Communication

Communication is one of the most important aspects of someone’s life, and we know hearing loss can have detrimental effects. In terms of the impact of hearing loss, we focus mainly on simple reception of words in the research lab and in the clinic, such as those on the QuickSIN or HINT (Hearing in Noise Test). We use tests that are measures of audibility and we are focused on the auditory periphery. We do not test the communicative cognitive system, which includes listening, comprehension and reaction.

Think about what you do when you are sitting at a restaurant talking to a friend; it is not like the HINT, where you hear a sentence and then the only issue is whether or not you heard each word. You are processing a lot of things. You are focusing your attention on your friend and ignoring other people around you. You are expending effort to try to comprehend that person. You are following the context of what the person is saying. You are using grammar and linguistics to help you predict what words the person is saying or interpret what words you missed. You are also reacting intellectually and emotionally to what they are saying. You are storing things in memory and you are thinking about how you are going to respond next. You might also be multi-tasking. You could be reading a menu, thinking about your work schedule the next day, or scanning the room for the waiter. Communication is extremely complicated, and there is a lot going on in this simple act of communicating with another person.

We do not really think about all these other aspects of communication when we design our speech tests or hearing aid technology. It is imperative to consider the performance and functioning of people who are listening to speech in noise. All of that is changing, and we are now starting to think about the auditory periphery as well as the cognitive system when it comes to hearing loss.

Auditory Scene Analysis

Auditory scene analysis is an important aspect of auditory function. In fact, I think it is one of the reasons that people with hearing loss suffer in noise so much. The official definition of auditory scene analysis by Al Bregman (1990), who has done more research in this area than anyone, is “the organization of sound scenes according to their inferred sources.” I find it to be a little difficult to interpret. I have my own definition of auditory scene analysis, and it is the ability to understand what is going on in the world around you just by what you are hearing. Basically, from the auditory signal, you can know this scene of the world around you, what objects are around, and what the sound sources are. If you close your eyes now, I bet you could, without me talking, create a scene of the room that you are in. There might be some air-conditioning on in the background. There may be people walking in the hallway behind you. There may be the sound of the computer fan. There are a lot of sounds being generated, and just with your ears, you can tell a lot from the world around you. If you think about how we all walk down the street these days reading our iPhones, we are not necessarily looking at the world around us, but we can tell a lot about the world around us from our hearing. That helps us navigate the world. We do this naturally.

Why is this an important consideration? It is important because these are the cues that help us figure out what sound components are coming from which source. If you have three different people talking around you, you can use the different pitches of those voices to figure out the number of people in the conversation and which streams of sound are coming from which person. You use commonalities and harmonicity, onsets and offsets, common modulation, and spatial location. There are a variety of cues on which you rely in order to figure out which sounds are coming from which sound source. That is how you build up the image of the world around you.

My view is that this is important for people with hearing loss in complex listening situations. When we talk about speech in noise, we are talking about speech in complex situations, where there are multiple sound sources all around that interfere with the desired sound signal, presumably a person talking in front of you. Let’s take the typical cocktail party, where there are a lot of talkers in front of us. In a normal auditory system, those sounds first get muddled together at the entrance to the ear. You have to wonder how you could ever separate them out and listen to the one person you want to hear. Your auditory system does this automatically. You do not have to think about it. It creates these auditory objects by using auditory scene analysis cues (harmonicity, common onsets/offsets, modulations, spatial location or timbre). It does this seamlessly because our brain has evolved over tens of thousands of years to do this very effectively. Now you can create this auditory scene of multiple people, and you can choose to focus your attention on the person that you want to hear. We do this naturally, and we do not even think about it.

If you have hearing loss, you might have difficulty with one of the auditory scene analysis cues. Pitch, for example, might not be so salient with hearing loss. You are going to have a harder time creating that auditory scene. You are going to have a harder time separating the objects, and you are going to have a harder time selecting the person to whom you want to listen. Hearing aids could have the same effect. They could make this worse or they could make it better. Let’s say, for example, that hearing aids make spatial location not so salient. We know behind-the-ear hearing aids (BTEs) have this consequence because they eliminate the pinna effect. In that case, you could have an even more difficult time in complex auditory environments.

I remember when I first got into the hearing aid industry in the 1990s, and Mead Killion said the best thing you could do in a noisy party is take your hearing aids out. That was because, at that time, hearing aid design was only focused on audibility. The hearing aids were disrupting some of these fundamental cues that the auditory system uses, and they were making it harder to understand speech in noise. Hearing is about a lot more than audibility; it is really about the complex functioning of the cognitive system that interprets the world and allows you to focus your attention and understand what it is that you want to hear.

Research Projects

The goals for our research are to investigate the impact of hearing aids and hearing loss on higher-order functions. In doing so, we found we needed to develop new ways of testing speech understanding in these complex situations, such as testing auditory scene analysis or testing the ability to switch attention from one talker to another. Again, this is something we do naturally, but it can be more of a challenge for people with hearing loss. We also needed to develop measures to determine the effect hearing aids have on cognitive function.

Let’s dive right in. I have five projects to present today, and I may not be able to cover all of them, but I hope to give you a taste of the things that we are working on. Hopefully, this will allow you to talk to your patients about what benefits they can expect to get from hearing aids beyond audibility and speech-to-noise ratio improvements.

Project #1: Listening Effort

This first project is on listening effort. The project was done in collaboration with University of California - Berkeley, which is one block from our research center in Berkeley, California. Our goal was to measure the effect hearing aids have on listening effort. This was driven by the observation that patients do not come in to an audiologist’s office and say things like, “I am having a harder time hearing high-frequency fricatives and low-level sounds.” They complain about not understanding in complex environments, and they also complain about being fatigued after being in a noisy situation for a period of time. If they go to a restaurant or a family dinner, after about half an hour, they tune out. They do this sooner than someone with normal hearing. There seems to be something about hearing loss that makes them mentally exhausted, and it causes them to withdraw from the conversation. That has multiple impacts, such as changes to their social interaction with the family and friends, and changes to their psychology. This was very important for us to figure out. Do hearing aids have any effect on listening effort? Can they lessen listening effort or make speech in noise less effortful for them to understand?

We looked at both the effect of noise reduction and directionality on listening effort. We did this with a standard cognitive science test called a Dual-Attention Task. This is a way of measuring effort. If you want to measure how much effort someone is spending on a task, have them do two things at the same time. You have them do the task you want to measure, but then you have them do a second task. Their performance on the second task is an indicator of the effort on the primary task. Our cognitive resources are limited. The brain only has a certain amount of resources it can allot to everything at once. If the primary task takes up a certain amount of that effort, you only have so much left to do everything else. But if the primary task does not take too much effort, then you have enough for that secondary task, and you can do well on it. However, if the effort on the primary task increases, it takes up more of the total resources, and you have less available for that secondary task, and you will do poorly on that task.

An example of this would be if I wanted to measure how much effort you were spending reading a magazine. One way I could do this is to have you read a magazine while, at the same time, watching a football game on TV. At the end of the hour period, I would ask you questions about the football game. If the primary task was easy, for example, you were reading People magazine, then you would have a lot of cognitive ability to pay attention to the football game. When I quizzed you on what happened in the game, you would score quite well. The high score on that football quiz would tell me it only took a small effort for the reading.

But let’s say you were reading a scientific journal, such as Ear and Hearing. That takes a lot more effort. You will have fewer resources available to watch the football game. When I quiz you on the football game, you will score poorly. That poor score on the football game tells me that you were spending a lot of effort on the reading. The score on the secondary task is an indicator of effort on the primary task. The poorer you do on the secondary task, the greater the effort you were spending on the primary task. That has been the theory for the past several decades of this approach in cognition, and that is why we chose to do this.

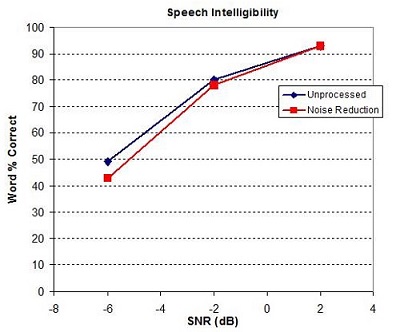

We had subjects do a visual task while they were also doing a standard speech-in-noise task. We measured the reaction time on the visual task. If their reaction time got worse, that means the effort on the speech-in-noise task increased. If their reaction time got better, that means their effort on the speech-in-noise task went down. The results are shown in Figure 1.

Figure 1. Speech intelligibility as measured by percent correct in varying speech-to-noise ratios. Blue = unprocessed signal through the hearing aid; Red = signal processed with Voice IQ noise reduction.

On the x-axis is the speech-to-noise ratio, and the y-axis is word recognition in percent correct. The blue line is the signal with no noise reduction and red is with a noise reduction algorithm, Voice IQ in our case. There were no statistically significant differences here between the unprocessed and processed signals. Noise reduction typically does not affect speech understanding, and it did not in this experiment. However, as we improved the speech-to-noise ratio, word recognition increased. We selected increments of 4 dB speech-to-noise ratio because that is about the improvement that you get with the directional microphone. Each increment is the equivalent of going from an omnidirectional to a directional mode. It is no surprise that, as you add a directional microphone effect, word recognition increases. This data was not new.

Reaction Time

Let’s look at the reaction time on the visual task during the speech-in-noise task. First, with the noise reduction off, the reaction time improved as the speech-to-noise ratio got better. The fact that the subjects got quicker in the visual task meant that they were doing better on the reaction-time task, and that meant the speech task got easier for them. This is probably no surprise. As speech intelligibility gets better, it becomes less effortful. This is the first time that anyone had ever objectively demonstrated this with a classic cognitive experiment. We were happy to get this result.

When we looked at the effect of noise reduction, we did see something unexpected. Because noise reduction does not improve speech understanding, we did not expect there to be any impact of noise reduction on listening effort. We found in the most difficult listening situation that noise reduction significantly made the visual task easier (Sarampalis, Kalluri, Edwards, & Hafter, 2009). The reaction times dropped. That meant that the speech-in-noise task got easier. This improvement in the reaction time tells us that in the most difficult listening situations, noise reduction had an objective benefit of making speech understanding easier for some reason. Even though speech understanding did not get better, the listening effort improved. This is the first time anyone has shown that noise reduction has an objective benefit beyond simply improving sound quality. I think this is important.

Features in hearing aids, such as directionality, noise reduction and perhaps frequency lowering, provide benefit that cannot be measured with speech scores alone. Over time, the cognitive system is going to function more naturally, and the situation will be less effortful. What does it mean if the situation is less effortful? It means that the cognitive system is working less hard. It has more resources to do other things. Memory will improve. We have other experiments that show that. The ability to dual-task will improve. The ability to comprehend and follow complex aspects of communication will improve, and hopefully fatigue will not be as much of a problem. I will show you some evidence of that later on as well.

This result was confirmed by Desjardins and Doherty (2014) in an Ear and Hearing paper. They also took our Voice IQ noise reduction with hearing-impaired subjects and showed that when you went from a no noise reduction condition to a noise reduction condition, the listening effort was decreased. This has now been replicated by several people, and it is something that we feel confident can be relayed to patients in terms of benefit that they should expect to receive from technology.

One interesting side note of what we found is that the reaction time dropped, even when the speech scores were already at 100% (Sarampalis et al., 2009). At +2 dB speech-to-noise ratio, subjects were already understanding everything, but they received a benefit of reduced effort when they went from some noise to no noise. Even in situations where people are understanding at 100%, there is still benefit from the technology by reducing the background noise. This was a new finding in 2009 and is also something that several other researchers have verified since. I think we can comprehensively say now that hearing aid technology in the form of noise reduction and directional microphones, but presumably some other technology as well, will have the impact of reducing listening effort for subjects. It has all of these consequences of the cognitive system functioning more naturally.

Project #2: New User Acclimatization

We expanded this research in collaboration with some researchers at University of Manchester in England to look at listening effort in new hearing aid wearers (Dawes, Munro, Kalluri, & Edwards, 2011). We used the same protocol, and we measured listening effort for speech in noise when they were first fit with their hearing aid. Then we had them come back 12 weeks later and measured listening effort again to see if there was any change. What we found was a change in their reaction times after 12 weeks compared to when they were first fit. Basically in all conditions, there was a trend for the reaction time to drop. There was no change in the control group, which shows that the effect we found was not due to people simply getting better at the protocol.

The researchers also used a self-assessment questionnaire, the Speech, Spatial and Qualities of Hearing Scale (SSQ), that includes a subscale on listening effort. The subjects recognized that the effort had dropped over the first 12 weeks of hearing aid usage. We can also say with new hearing aid wearers that they will experience an improvement in listening effort, just perhaps not when you fit them right away. In fact, some of our pilot research suggests that effort might go up the first time that they are fit with hearing aids because they are experiencing new sounds they have not heard before. However, over at least 12 weeks of time, the effort that they take to understand speech in noise will drop. This is something that they will notice. This is also a good message for new hearing aid wearers, that even when they put a hearing aid on for the first time, they may feel a little bit overwhelmed with all of the new sounds they are hearing, but research shows the amount of effort to understand speech will improve over time.

Project #3: Fatigue

We wanted to look at the issue of fatigue. We did some collaboration with Ben Hornsby (2013) at Vanderbilt on this. The key here was the issue that after listening for an extended period of time, your brain gets exhausted. People with hearing loss struggle more with listening effort, and therefore, they get fatigued faster. We wanted to see if hearing technology could make patients less fatigued. Would they be able to participate in those social situations for a longer period of time and not withdraw so often?

Dr. Hornsby did some clever experiments. In one, he put subjects with hearing loss in a reverberation chamber with babble coming from all around and speech from the front. He had the subjects not only do a standard speech test where they had to repeat the words, but he had them memorize words as well. At the end of several sentences, they had to repeat the words that they had heard. Then he also had them do a visual task, which became a measure of effort. He had subjects do this for an hour straight. He measured how well they did on the visual task over the course of that hour. If the subjects became fatigued, their performance would get worse over the course of that hour. If, however, fatigue was not an issue, then performance would not degrade over the hour period.

The results are as follows. Time was plotted in 10-minute increments over the course of the hour. Reaction time was measured as a percent change from the baseline-reaction time at each 10-minute interval. This reaction time was sort of a relative measure. It was noted that reaction time gets worse over the course of that hour when people do not wear hearing aids. Dr. Hornsby then repeated the experiment with the same subjects when they were wearing hearing aids, and the reaction time stayed more consistent. First of all, the reaction time dropped right away at the beginning. That is the indication that hearing aids, right away, will reduce effort, which we have already seen. The slope of the graph was flat, showing no change in effort over time.
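To make the relative measure concrete, here is a minimal Python sketch of how reaction time might be expressed as a percent change from baseline at each interval. The function name and all of the numbers are hypothetical, chosen only for illustration; they are not data from Dr. Hornsby’s study, and the study’s actual analysis may have differed.

```python
def percent_change_from_baseline(baseline_rt_ms, interval_rts_ms):
    """Express each interval's reaction time as a percent change from baseline.

    Positive values mean slower (worse) reaction times than baseline.
    """
    if baseline_rt_ms <= 0:
        raise ValueError("baseline reaction time must be positive")
    return [100.0 * (rt - baseline_rt_ms) / baseline_rt_ms
            for rt in interval_rts_ms]


# Hypothetical mean reaction times (ms) at six 10-minute intervals.
# These values are invented for illustration only.
baseline_ms = 500.0
unaided_ms = [510.0, 525.0, 540.0, 555.0, 570.0, 585.0]

unaided_change = percent_change_from_baseline(baseline_ms, unaided_ms)
print(unaided_change)
```

With these invented numbers, the percent change climbs steadily over the hour, which is the pattern one would read as growing fatigue; a flat series would suggest no change in effort over time.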

This is very strong evidence that hearing aids will reduce the fatigue from listening to speech in noise over an extended period of time. Behaviorally, what we would expect to see is people with hearing loss would be more engaged in the social situations. They would continue to interact with their family, and that could have bigger effects in terms of their social isolation, psychology, and physiology that can result from social withdrawal. I will talk about that at the very end with respect to some recent research on dementia.

Our conclusion is that hearing aids can reduce mental fatigue with all the benefits that come with them. I have heard of patients’ significant others coming back to the audiologist and thanking them for bringing their spouse back to life, saying that they engage more with their family, and they are interacting more like they used to when their hearing was better. I think all of these results are not surprising to any of you, because you all have some intuition that all of this is true. What we are doing here is developing the evidence to support this notion, which is important for a lot of reasons. It confirms what many of us have believed, and it also provides evidence to government organizations so we can create more awareness of the problems for people with hearing loss and make it a higher-agenda issue.

Project #4: Binaural Hearing

Next I want to talk about an experiment that we did looking at the effect of binaural hearing (spatial hearing) on listening effort. We wanted to understand if improved spatial perception has an effect on listening effort. We are starting to talk about spatial hearing in the hearing aid world and in hearing science in general. We know that people with hearing loss do have some deficits in spatial hearing, and we are starting to develop technology to improve spatial hearing and localization. In some parts of the world, people are often only fit with one hearing aid. They are not getting good audibility from both ears and are being kept at a deficit in terms of their spatial hearing. We wanted to know the impact of that on the patient’s cognition. Obviously, there is some impact on your ability to localize sound, but what is the impact on the workings of the cognitive system?

Binaural function is more than localization. It has a huge impact on many aspects of auditory perception. Some of those are better-ear listening, auditory scene analysis capability, binaural hearing, echo perception or the ability to ignore echoes, and binaural squelch, which is reduction of certain types of noises. The binaural system has a significant impact on auditory perception that we do not think about. We are focused specifically on listening effort.

For this study, we used a dual-attention task with eight normal-hearing adults. We had the subjects in four conditions. There was always a target talker and two interfering talkers in this task. In one condition, the talkers all came from the same location in front of the listener, and they were all female. In another condition, the talkers were still in front of the listener, but the target was female and the interferers were male. In another condition, they were all female but they were spatially separated. In the final condition, we had the full mix of female target but male interferers and spatial separation.

Two of the different cues that are used for auditory scene analysis are pitch and spatial location, and we were trying to see what effect these cues had on listening effort. We were particularly interested in going from the male-female collocated condition to the male-female spatially separated condition. There was some evidence that if you have the pitch cues, you do not need the spatial cues. That was part of our investigation.

The secondary task in which we had subjects engage was a visual task. We had a dot that moved around a screen. While they were doing the speech-in-noise test, they had to move a mouse controlling a red ring and keep it on top of the dot. The percentage of time that the red ring was on top of the dot was our indication of their performance on the secondary task.
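As a rough illustration of this secondary-task score, the sketch below computes the percentage of samples in which the ring is on the dot. It assumes the ring and dot positions are sampled at a fixed rate and that "on top of" means the ring center lies within a set radius of the dot; both of those details, along with the function name and the sample values, are assumptions for illustration and not the actual scoring used in the study.

```python
import math


def percent_time_on_target(ring_positions, dot_positions, radius):
    """Percentage of samples in which the ring center is within `radius` of the dot.

    Positions are (x, y) tuples sampled at the same fixed rate (an assumption).
    """
    if len(ring_positions) != len(dot_positions):
        raise ValueError("ring and dot must have the same number of samples")
    if not ring_positions:
        raise ValueError("at least one sample is required")
    on_target = sum(
        1
        for (rx, ry), (dx, dy) in zip(ring_positions, dot_positions)
        if math.hypot(rx - dx, ry - dy) <= radius
    )
    return 100.0 * on_target / len(ring_positions)


# Hypothetical samples, invented for illustration only.
ring = [(0, 0), (1, 1), (5, 5), (9, 9)]
dot = [(0, 1), (1, 1), (8, 9), (9, 10)]
score = percent_time_on_target(ring, dot, radius=2)
print(score)
```

A lower percentage on this tracking task would be read as more effort being spent on the primary speech-in-noise task.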

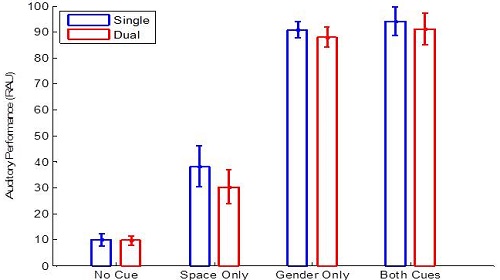

Figure 2 shows the data. These are speech scores in those four conditions. If there were no cues and all female talkers, subjects did poorly; only 10% were correct. If we spatially separated the signal and competing talkers, but they were all still female, they improved a little. If we kept the signals collocated, but the target was female and the interferers were male, performance sky-rocketed to near 100%. If we also took that male-female condition and added spatial separation, there was no difference in speech understanding.

Figure 2. Auditory performance in four listening conditions: no cues, all female competing talkers that were spatially separated, male-female talkers with no spatial separation, and male-female talkers that were spatially separated.

The secondary task was the measure of effort. What was most interesting is that in both of the male-female conditions the subjects were up near 100% speech understanding. If we look at Figure 2, you would say that there is no benefit in adding the spatial cue when you have the male-female pitch difference. However, there is a difference in listening effort. Although there was no improvement in speech understanding, there was a significant improvement in listening effort by adding those spatial cues. We have used this paradigm in other conditions and the story remains the same. Spatial cues, even if they do not add anything to speech understanding, are important for the cognitive system to do its job more efficiently. This tells us that it is worth spending the time to develop technology that will improve spatial hearing.

A lot of people might think that patients do not need more than one hearing aid, although this view is not as prevalent in the United States. However, there is plenty of evidence that you get significant benefit from hearing with both ears. Fitting people with two hearing aids is valuable because it provides good spatial hearing and good auditory scene analysis, and it has the impact of reducing listening effort. If you ever doubt that and have normal hearing, the next time you are at a party, plug one ear and see how well you are able to focus your attention on different talkers around you. It is much harder to do than when you have both ears open. You still might understand perfectly with one ear plugged up, but it will be a lot more effortful for you. We are building up this body of evidence that all of these aspects of auditory perception have these complicated relationships to the brain, far beyond issues around audibility or speech-to-noise ratio.

Project #5: Semantic Information Testing

The last experiment I want to talk about before I get into the issue of dementia is a new test that we are developing in conjunction with University of California – Berkeley. It is getting at the issue that communication is more complicated than just audibility. Usually you have multiple talkers, and you need to separate them to understand what they are saying. You might switch your attention from one talker to another. It is not enough to only hear the word or the words in the sentence. There is a lot going on, and we wanted to develop a test that represents multiple talkers at the same time, switching of attention from one talker to another, and whether or not the meaning of what is said is understood. This is different than the number of words correct in a sentence.

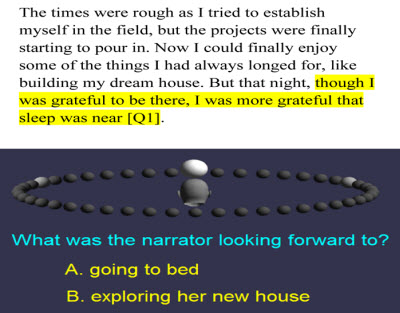

Here is what we have developed. The test has running dialogue coming from one or more speakers. We have taken books-on-tape and journal articles and recorded them. There is a screen in front of the subject in our laboratory. Every now and again, after they hear a bit of information, we will pop a question up on the screen and ask them if the answer is A or B, but the answer will be phrased in a way that it does not use the same words as what they heard. Look at the example in Figure 3.

Figure 3. Semantic tracking example, where a question is posed and the listener must answer A or B.

In this example, “though I was grateful to be there, I was more grateful that sleep was near,” the question was, “What is the narrator looking for? A: Going to bed, or B: Exploring her new house.” The word bed was not used anywhere, but if you understood what the person was saying, then you would know the answer is A. We call this semantic information tracking because it is about getting the meaning of the information. This is different than a classic test such as the HINT or even high-context SPIN (speech-in-noise) sentences, where the task is to repeat the words of the sentence. Our task focuses on comprehension. That is the difference between semantic and phonetic. An example is the phrase, “She had been instinctively nervous.” A phonetic question about that statement would be, “Was she instinctively nervous?” A semantic question would be, “Was she easily startled?”

We tested subjects both phonetically and semantically on what they heard. We had subjects with three streams coming at them from different locations, and we would use a light to indicate where the talker was. We would get a measure of how quickly they were able to switch their attention from one talker to another. We did that in both the semantic and phonetic conditions. It turns out that it takes about twice as long for subjects to switch their attention in the semantic task as it does in the phonetic task. This means they were more easily able to capture the words that were said by another talker, but had a harder time capturing the information in what was being said. That is what happens in the real world. We are not just trying to capture the words; we are trying to capture the meaning of what people are saying. We believe this test is more representative of the difficulties that people have in the real world and can get at the challenges of understanding, as opposed to only the audibility of words, which is what the vast majority of our speech tests measure today.

I think this task engages the cognitive system more because it is not only about semantics. There are multiple talkers at the same time, you need to do your auditory scene analysis, and then you are switching and refocusing attention. I think we are going to see more experiments and tests like this in our clinics that will get more at real-world scenarios.

Finally, I want to talk about two papers from Frank Lin at Johns Hopkins University, which have been getting a lot of press recently (Lin, Metter, O’Brien, Resnick, Zonderman, et al., 2011; Lin, Yaffe, Xia, Xue, Harris, et al., 2013). I want to summarize the results, what they mean, and what can be conveyed to patients.

Suppose you have two groups of people who are identical in all ways in terms of age, lifestyle, et cetera, except that one group has hearing loss and one has normal hearing, and you measure how their cognitive function changes over time. Lin found that people who had hearing loss at the start of this investigation had a significantly increased chance of reduced cognitive function after 6 to 11 years. He found that the decline of cognitive ability is anywhere from 33% to 42% faster if someone has hearing loss than if someone has normal hearing. The suggestion around this is that hearing loss can cause social isolation, and social isolation can lead to depression and other psychological consequences that do have an effect on cognitive function. That is a hypothesis.

We know for a fact that there is a link: if you have hearing loss, you are more at risk for cognitive decline and dementia. What we do not know is why, and there are two possibilities. The first is that hearing loss is not the cause, but that both share a common cause. For example, a poor vascular system could be causing poor functioning of both the ear and the brain, in which case the hearing loss is not responsible for the cognitive decline. The second is that hearing loss is the actual cause of the cognitive decline, through the social isolation that results from hearing loss and the subsequent deficits to the patient. So the real question is, if you fit subjects with hearing aids, will it reverse the effect? Will it stop this decline of cognitive function? If the second possibility is true, and hearing loss is the cause of cognitive decline, there is a possibility that hearing aids can reverse the effect. If the first is really what is going on, then there is no possibility of hearing aids reversing cognitive decline.

Hopefully, after discussing all of this research, you can see that hearing aids can have a positive effect on cognitive abilities. They can counteract some of the negative effects of hearing loss on cognitive function. In the future, I think we will be seeing hearing aids that are designed not only to improve audibility and speech understanding, but also to improve cognitive functions such as memory, the ability to multitask, and comprehension. Along the way, we will be developing new diagnostics and new outcome measures to try to better understand the individual needs of patients, including their cognitive needs, and to better test the impact of hearing loss and hearing aids on their cognition.

Question and Answer

Will similar research be used with children with hearing loss?

Some people are looking at the pediatric aspects of what I am talking about. We do not do pediatric research in our lab, but from other research with children the issues of listening effort and cognition are absolutely the same. Those researchers are going to have to develop different tasks, though, because the tasks we use are more appropriate for adults than for children. I do believe the issues are the same, though.

Can the use of a system like LACE help with reducing the risk of dementia?

That is a terrific question. There is some evidence that programs like that do have cognitive benefit. There are companies, such as Posit Science and Lumosity, that have some peer-reviewed publications that suggest that their own branded tests can provide improvements in cognitive ability. We still do not quite know yet, but there is evidence that some of these tests are beneficial. I think the challenge is getting people to use them on a regular basis.

Are there adequate outcome measures? Do you have any recommendations?

We are trying to develop better outcome measures. I would say to hold on. I think some of the questionnaires being developed, such as the SSQ, might be possibilities. There are probably some things that we can do in our laboratory as well, but those are still under development. It is definitely a need, though. Unfortunately, I cannot make any recommendations right now. I think making sure that patients are aware of these benefits and asking them when they come back whether they have noticed any improvements is a start. You should question their significant others as well, as they will probably notice more than the hearing aid wearers themselves.

In terms of listening effort dual-task tests, was there any effect from general increased age?

We found that there was greater variability in the scores with age, probably because of reduced dexterity and things like that. We did not see any trend in the effect as a function of age, where someone who was older or younger got more or less benefit. The data were simply more variable for us. That does not mean that they are not getting the same benefit, though. One thing we do know is that there is a general reduction in cognitive ability due to age. If you look at someone who is young and someone who is old on these measures, the old person will inherently do worse because of cognitive decline from age. However, that does not necessarily mean that they will not get the benefit to cognition from hearing aid technology. Generally, cognition declines with age, and so you do see a reduction in cognitive scores when you compare older people to younger people.

The symptoms you describe hearing aids helping are typical of one of my patients with normal hearing. Could hearing aids help her? I notice if I speak louder and slower to her, she understands better.

That is a great question. I was just at a meeting with the heads of research from the other major hearing aid companies, and this topic came up. We are interested in this exact question for people with normal hearing. Someone used the term “hidden hearing loss,” suggesting that they may have hearing difficulty that is not captured by the audiogram for some reason. We are going to be issuing an RFP (request for proposals) for grant proposals to get at this issue. There is evidence out of Mass Eye and Ear and the Harvard Medical School in Boston that noise exposure can damage up to 50% of your auditory nerve without showing any effect on your audiogram. You could be walking around thinking you have normal hearing while half of your auditory nerve is not working anymore. We need ways of identifying these subjects. Will they benefit from hearing aids? I do not know. As you say, speaking more slowly will help and speaking louder will improve speech-to-noise ratios, and technologies including directional microphones and noise reduction will potentially help. Amplification probably will not. But this is purely speculation. I do not think there has been any published evidence on that. I think we will see heightened focus on this issue as we try to understand it better and see if technology can help.

References

Bregman, A. S. (1990). Auditory scene analysis: the perceptual organization of sound. Cambridge, MA: MIT Press.

Dawes, P., Munro, K., Kalluri, S., & Edwards, B. (2011, June). Listening effort and acclimatization to hearing aids. Paper presented at the International Conference on Cognition and Hearing, Linkoping, Sweden.

Desjardins, J. L., & Doherty, K. A. (2014). The effect of hearing aid noise reduction on listening effort in hearing-impaired adults. Ear and Hearing, March 11. Epub ahead of print.

Hornsby, B. W. Y. (2013). The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear and Hearing, 34(5), 523-534. doi: 10.1097/AUD.0b013e31828003d8

Lin, F. R., Metter, E. J., O’Brien, R. J., Resnick, S. M., Zonderman, A. B., & Ferrucci, L. (2011). Hearing loss and incident dementia. Archives of Neurology, 68(2), 214-220. doi: 10.1001/archneurol.2010.362.

Lin, F. R., Yaffe, K., Xia, J., Xue, Q. L., Harris, T. B., Purchase-Helzner, E., et al. (2013). Hearing loss and cognitive decline in older adults. JAMA Internal Medicine, 173(4), 293-299. doi: 10.1001/jamainternmed.2013.1868.

Lunner, T., & Sundewall-Thorén, E. (2007). Interactions between cognition, compression, and listening conditions: effects on speech-in-noise performance in a two-channel hearing aid. Journal of the American Academy of Audiology, 18(7), 604-617.

McCoy, S. L., Tun, P. A., Cox, L. C., Colangelo, M., Stewart, R. A., & Wingfield, A. (2005). Hearing loss and perceptual effort: downstream effects on older adults’ memory for speech. The Quarterly Journal of Experimental Psychology, A Human Experimental Psychology, 58(1), 22-33.

Mitchell, L. A., MacDonald, R. A., & Knussen, C. (2008). An investigation of the effects of music and art on pain perception. Psychology of Aesthetics, Creativity, and the Arts, 2(3), 162-170. doi: 10.1037/1931-3896.2.3.162

Sarampalis, A., Kalluri, S., Edwards, B., & Hafter, E. (2009). Objective measures of listening effort: effects of background noise and noise reduction. Journal of Speech, Language and Hearing Research, 52, 1230-1240.

Cite this content as:

Edwards, B. (2014, July). Starkey Research Series: how hearing loss and hearing aids affect cognitive ability. AudiologyOnline, Article 12832. Retrieved from: https://www.audiologyonline.com